Are you excited about how Salesforce protects your data with new generative AI tools? Salesforce is committed to building generative AI tools that help customers work smarter while prioritizing security and trust. To ensure the safe and secure use of generative AI, Salesforce has created the Einstein Trust Layer. It’s designed to help you, and your team use AI confidently within your organization. Let’s explore how Salesforce is setting the standard for secure generative AI.

What is the Einstein Trust Layer?

The Einstein Trust Layer enhances the security of generative AI with built-in data and privacy controls. These controls ensure Einstein delivers AI grounded in your customer and company data without compromising security.

The Trust Layer provides secure access to various large language models (LLMs) within and outside Salesforce. It acts as a protective barrier between LLMs and your employees or customers, enabling secure use of generative AI across business applications like sales emails, work summaries, and customer service replies.

How does the Einstein Trust Layer work?

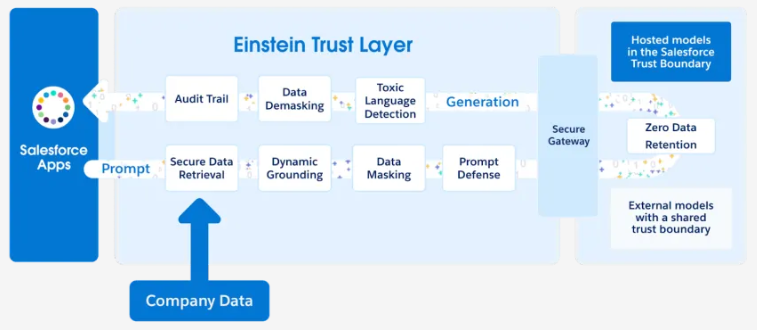

The Einstein Trust Layer lets you benefit from generative AI while safeguarding your data. The following diagram illustrates the Einstein Trust Layer process, showing how Salesforce ensures data security when using generative AI.

Source: Salesforce Trailhead

Here’s a breakdown:

1. Salesforce Apps: Prompts originate from Salesforce applications, leveraging Company Data.

2. Einstein Trust Layer: The trust layer incorporates several features:

- Secure Data Retrieval: Only accesses relevant, secure data.

- Dynamic Grounding: Bases AI outputs on specific, approved data.

- Data Masking: Protects sensitive information during processing.

- Prompt Defense: Adds an extra layer of validation.

- Generation: AI generates responses using secure processes.

- Toxic Language Detection: Ensures prompts and responses are safe and appropriate.

- Data Demasking: Securely restores masked data in responses, ensuring accuracy and personalization while maintaining data protection.

- Audit Trail: Tracks each prompt’s journey for accountability.

3. Secure Gateway: Acts as a protective barrier between the AI models and the user.

4. AI Models: It includes models hosted within the Salesforce trust boundary or external models with shared trust standards. Zero Data Retention ensures no data is stored post-processing.

This process guarantees safe and trustworthy AI use for sales emails, service responses, and more.

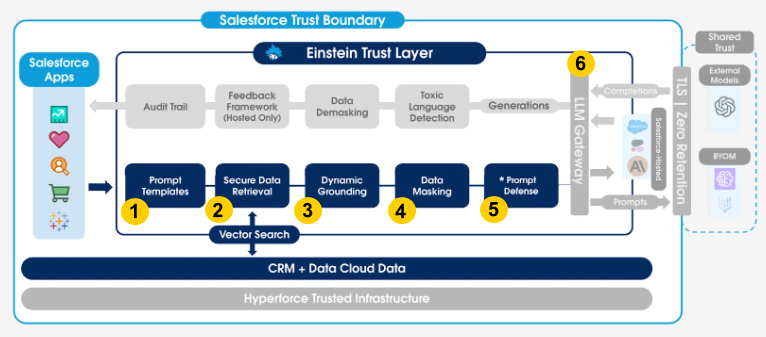

Let’s split the data journey through the Einstein Trust Layer into two: The Prompt Journey and The Response Journey and explore each feature in detail.

The Prompt Journey:

Source: Salesforce Trailhead

What is a Prompt?

A prompt is an instruction or input provided to a generative AI system to guide its response, including context, constraints, or specific details for the desired output. Clear instructions, relevant context, and constraints make prompts effective, leading to high-quality AI responses.

Key Steps in the Prompt Journey:

1. Prompt Template Selection:

Salesforce offers pre-built prompt templates tailored for various business needs to simplify the prompting process. When a Salesforce app sends a request to the Einstein Trust Layer, it uses a relevant prompt template. Before reaching the AI model, Salesforce safely populates these templates with customer data and resources.

2. Secure Data Retrieval:

Ensures that only authorized and appropriate data is accessed from the CRM od Data Cloud, respecting all data permissions and privacy rules in Salesforce.

3. Dynamic Grounding:

Dynamic Grounding customizes a generative AI prompt with relevant, real-time data and ensures the AI provides accurate, context-aware, and personalized responses. In addition, this involves securely retrieving and populating a prompt template with data from your organization’s systems, such as customer records, case details, or relevant knowledge articles.

For example, in a customer service scenario, the Dynamic Grounding feature might populate a prompt template with:

- The customer name and account information.

- Case issue details.

- Relevant knowledge articles to assist in solving the problem.

4. Data Masking:

Data Masking is a security technique to protect sensitive information by replacing it with placeholder values, such as tokens or anonymized data. It ensures that private data, like names, addresses, or credit card numbers, is not exposed to unauthorized systems or users while still allowing processes, like AI generation, to work within the context of the information. Moreover, it helps organizations adhere to GDPR or CCPA data protection regulations.

How Data Masking Works:

- Identification: Sensitive information, such as customer names, phone numbers, or account details, is identified within the data.

- Tokenization: It replaces each sensitive value with a token or placeholder (e.g., “[Customer Name]” or “[Credit Card Number]”).

- Retention of Context: The placeholders maintain the context and structure of the data so that AI models or other systems can generate appropriate and relevant responses.

- Reintegration: After processing (e.g., generating an AI response), the placeholders can be replaced with the original values, if necessary, but only in secure environments.

Example:

In a customer service scenario, if an AI model generates a reply for a customer issue:

- Before Masking: “Dear John Smith, your account number 123456 is being reviewed.”

- After Masking: “Dear [Customer Name], your account number [Account Number] is being reviewed.”

5. Prompt Defense:

Prompt Defense is a safeguard mechanism to protect generative AI systems from producing unintended, harmful, or inappropriate outputs. It acts as a layer of protection by adding guardrails and specific instructions to the AI model, ensuring that its responses align with the intended use case and do not compromise security or trust.

Key Functions of Prompt Defense:

a. Preventing Malicious Attacks:

- It protects against prompt injection attacks, where bad actors or even well-meaning users attempt to manipulate the AI to behave in unintended ways.

- Ensures that AI does not generate harmful, confidential, or inappropriate content, even if a malicious or misleading prompt is submitted.

b. Setting Behavioral Boundaries:

- Includes explicit instructions within the prompt to guide the AI’s responses (e.g., “Only provide answers based on available data”).

- Ensures the AI avoids topics or actions outside its designed purpose, such as offering advice in areas where it lacks expertise.

c. Mitigating Risks of Hallucinations:

Reduces the chances of the AI fabricating information or creating biased, harmful, or irrelevant responses.

d. Improving Accountability:

Provides an audit trail to track how AI handles the prompts and responses, ensuring transparency and enabling debugging when issues arise.

Example in Action:

In a customer service scenario, Prompt Defense might:

- Restrict AI from making financial recommendations it is not trained to handle.

- Add safeguards to prevent the AI from generating sensitive or inappropriate responses, even if the prompt asks for them directly.

- Provide instructions like: “If unsure about the query, do not generate an answer and instead recommend escalating to a human agent.”

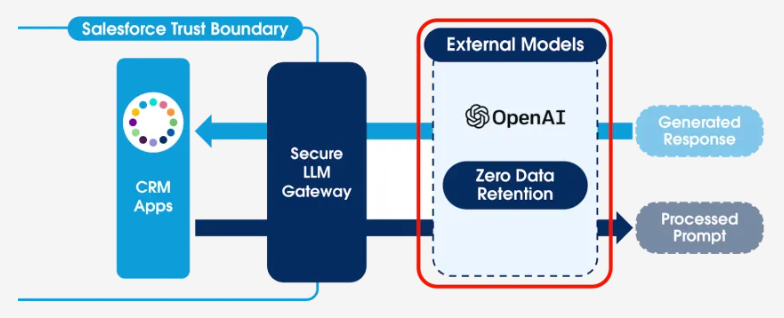

6. Secure LLM Gateway:

The Secure LLM Gateway is a protective mechanism within the Einstein Trust Layer that securely transmits prompts to external large language models (LLMs) while safeguarding customer data, ensuring compliance with zero data retention policies, and maintaining data security during AI interactions.

7. Zero Data Retention:

Source: Salesforce Trailhead

Zero Data Retention is a core security feature of the Einstein Trust Layer that ensures customer data is never stored or retained by LLMs used for generative AI processes.

When customer data, such as prompts or generated responses, is sent to an external LLM (like OpenAI), the model processes the information to generate the desired output. However, Salesforce’s Zero Data Retention policy requires the external LLM to immediately delete all data from these interactions after processing. This process ensures that the data cannot be stored, reused, or leveraged for training.

This policy eliminates concerns about data privacy, as sensitive customer information remains within the Salesforce Trust Boundary.

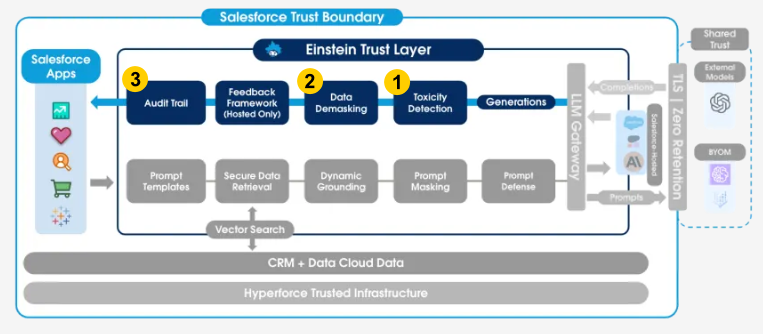

The Response Journey:

Source: Salesforce Trailhead

1. Toxic Language Detection:

The toxic Language Detection feature identifies and filters out harmful, offensive, or inappropriate content such as hate speech, violence, or profanity in generated AI responses, ensuring safe and respectful communication. Once the LLM generates a response, it is checked for harmful or inappropriate language using deep learning models.

2. Data Demasking:

Masked data is replaced with original customer details, making the response personal and relevant. In the Einstein Trust Layer, this process happens after a response is generated by an LLM and has passed through various security checks, such as toxic language detection.

For example, customer details like names or account numbers are initially masked before being sent to the LLM. Once the LLM generates a response, the Trust Layer demasks this data to the original customer details.

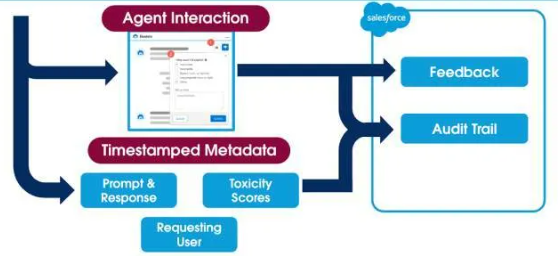

3. Audit Trail:

Source: Salesforce Trailhead

An audit trail in the Einstein Trust Layer is a detailed record of all activities and steps involved in the generative AI process, from the initial prompt to the final response. It provides a transparent record of all interactions, enabling organizations to monitor the process, resolve issues, and build user trust by showcasing secure and responsible AI practices.

Key Features:

- Comprehensive Logging:

Captures the original prompt, generated responses, toxic language scores, data masking/demasking events, and user feedback.

- Timestamped Metadata:

Every step in the process is recorded with accurate timestamps to provide a chronological sequence of events.

- Accountability:

Ensures compliance with data protection policies and demonstrates that customer data is handled securely and responsibly.

- Moderation Oversight:

Tracks toxic language detection and other safeguards applied during the process.

Every Salesforce generative AI solution undergoes this secure journey, ensuring trust, safety, and data protection for all users and customers.

Ready to unlock the full potential of Salesforce for your marketing strategy?

Subscribe to Newsletter

Get our latest blogs directly to your inbox.